What is firmware?

3D printers are computers, just like most other things these days, and they need some sort of logic to tell them what to do. This is where firmware comes in. The most common firmwares these days are:

- Marlin

- Klipper

- RepRapFirmware

Most factory-produced printers come with Marlin, which seems to be the industry standard. Marlin is a great firmware in many ways: it's well supported by slicers, most printers use it out of the box, and the stock build is probably tuned specifically for your printer. So why is the title of this "Klipper and you" if Marlin is so great?

Klipper

Klipper is an up-and-coming firmware for 3D printers with features that are hard to pull off on a printer's controller alone. Unlike most firmware, it splits the work in two. The controller that normally runs Marlin is turned into a simple device that does little more than take very basic, low-level commands to drive the stepper motors. So where does the real work happen? On a Raspberry Pi (or another small Linux computer), which has far more processing power than the microcontroller on even the most expensive printer control boards. Some of the most notable Klipper features that you typically won't find enabled on a stock Marlin printer are:

Pressure advance

Pressure advance models the pressure that builds up in the nozzle and adjusts the extruder to compensate. This reduces the all-too-common oozing that causes stringing and other imperfections in prints, even when printing slowly. Not everyone prints slowly, though, which is where the next feature comes in.

You can read more about it here.
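As a rough illustration of the idea, here is a minimal Python sketch of the core pressure advance relation described in the Klipper docs: the extruder is pushed ahead of its nominal position by an amount proportional to the current filament velocity. The function and parameter names are my own, and real Klipper also smooths this movement over time, so treat it as a toy model rather than how the firmware is actually written.

```python
def pressure_advance_position(nominal_position_mm, nominal_velocity_mm_s, pressure_advance_s):
    """Extruder position with pressure advance applied (simplified model)."""
    # Extra filament is pushed in proportion to how fast we are extruding.
    return nominal_position_mm + pressure_advance_s * nominal_velocity_mm_s


def extruder_trace(velocities_mm_s, dt_s, pressure_advance_s):
    """Integrate a list of filament velocities and apply pressure advance to each sample."""
    position = 0.0
    trace = []
    for velocity in velocities_mm_s:
        position += velocity * dt_s  # nominal filament position so far
        trace.append(pressure_advance_position(position, velocity, pressure_advance_s))
    return trace
```

Feeding it a move that speeds up and then slows down, for example `extruder_trace([0, 1, 2, 2, 2, 1, 0], 0.1, 0.05)`, shows the extruder pushing extra filament while accelerating and pulling it back while decelerating, which is what relieves the nozzle pressure at the end of a line.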

Input Shaping

It's no secret that things moving fast will vibrate and resonate. If the printer moves fast enough, that resonance shows up in your prints as ringing. The usual fix is to stiffen up your printer, which is always a great first step, but eventually you just can't improve it further without changing the design. That is where input shaping comes in. After a calibration step measures the printer's resonant frequencies, Klipper uses the processing power of the Raspberry Pi to shape each move so the vibrations cancel out before the instructions are sent to the printer. This lets you reach much higher speeds without seeing the artifacts in your prints.

You can read more about it here.
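To make the "cancel it out" part a bit more concrete, here is a small Python sketch of a ZV (zero vibration) shaper, the simplest textbook input shaper. It splits every move into two smaller pushes half a vibration period apart, so the ringing caused by the second push cancels the ringing from the first. The function names, the 40 Hz example frequency, and the 0.1 damping ratio are my own assumptions for illustration. This is the general technique, not Klipper's actual code.

```python
import math


def zv_shaper(frequency_hz, damping_ratio=0.1):
    """Return (amplitudes, times) of the two impulses of a ZV shaper."""
    df = math.sqrt(1.0 - damping_ratio ** 2)
    k = math.exp(-damping_ratio * math.pi / df)
    half_period_s = 0.5 / (frequency_hz * df)  # half of the damped vibration period
    amplitudes = [1.0 / (1.0 + k), k / (1.0 + k)]  # sums to 1, so move distance is unchanged
    times = [0.0, half_period_s]
    return amplitudes, times


def residual_vibration(amplitudes, times, frequency_hz, damping_ratio=0.1):
    """Relative vibration left after the last impulse, for a resonance at frequency_hz."""
    omega = 2.0 * math.pi * frequency_hz
    omega_d = omega * math.sqrt(1.0 - damping_ratio ** 2)
    c = sum(a * math.exp(damping_ratio * omega * t) * math.cos(omega_d * t)
            for a, t in zip(amplitudes, times))
    s = sum(a * math.exp(damping_ratio * omega * t) * math.sin(omega_d * t)
            for a, t in zip(amplitudes, times))
    return math.exp(-damping_ratio * omega * times[-1]) * math.hypot(c, s)
```

Calling `residual_vibration(*zv_shaper(40.0), 40.0)` gives a value that is effectively zero, while checking the same shaper against a different resonance such as 60 Hz leaves a noticeable residual. That is why the calibration step matters: the shaper only cancels the frequency it was tuned for.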

The bad part

Klipper is not all sunshine and rainbows. The stock Prusa firmware at least had some nice features: simple step-by-step Z offset tuning, a simple filament change routine, and a screen on the printer that actually did something (not a Klipper problem in general, the screen just isn't supported on the Prusa Mini under Klipper). I think Klipper may be a massive step up for many people on printers like the Ender 3, giving much better prints even at stock speeds, but there is a learning curve. It's not hard, but it is a project. The kind people in the Klipper Discord are wonderful, and the docs are stunning, not to mention the amount of YouTube coverage the firmware gets on high-end printers such as the Voron series. If you prefer Marlin, it's not like you can't go back, so I would say that Klipper is worth a shot if you have the time or like to tinker.

Conclusion

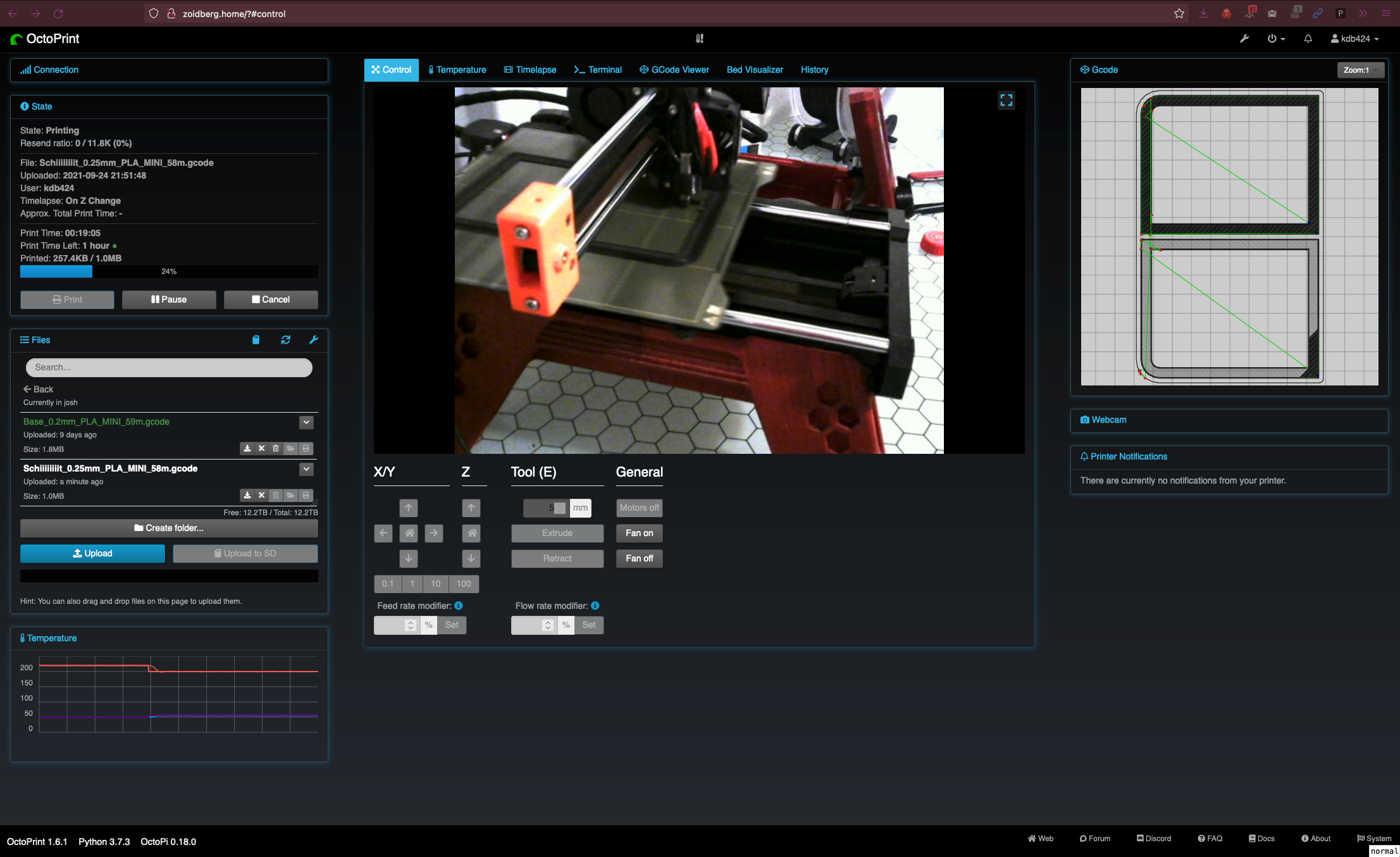

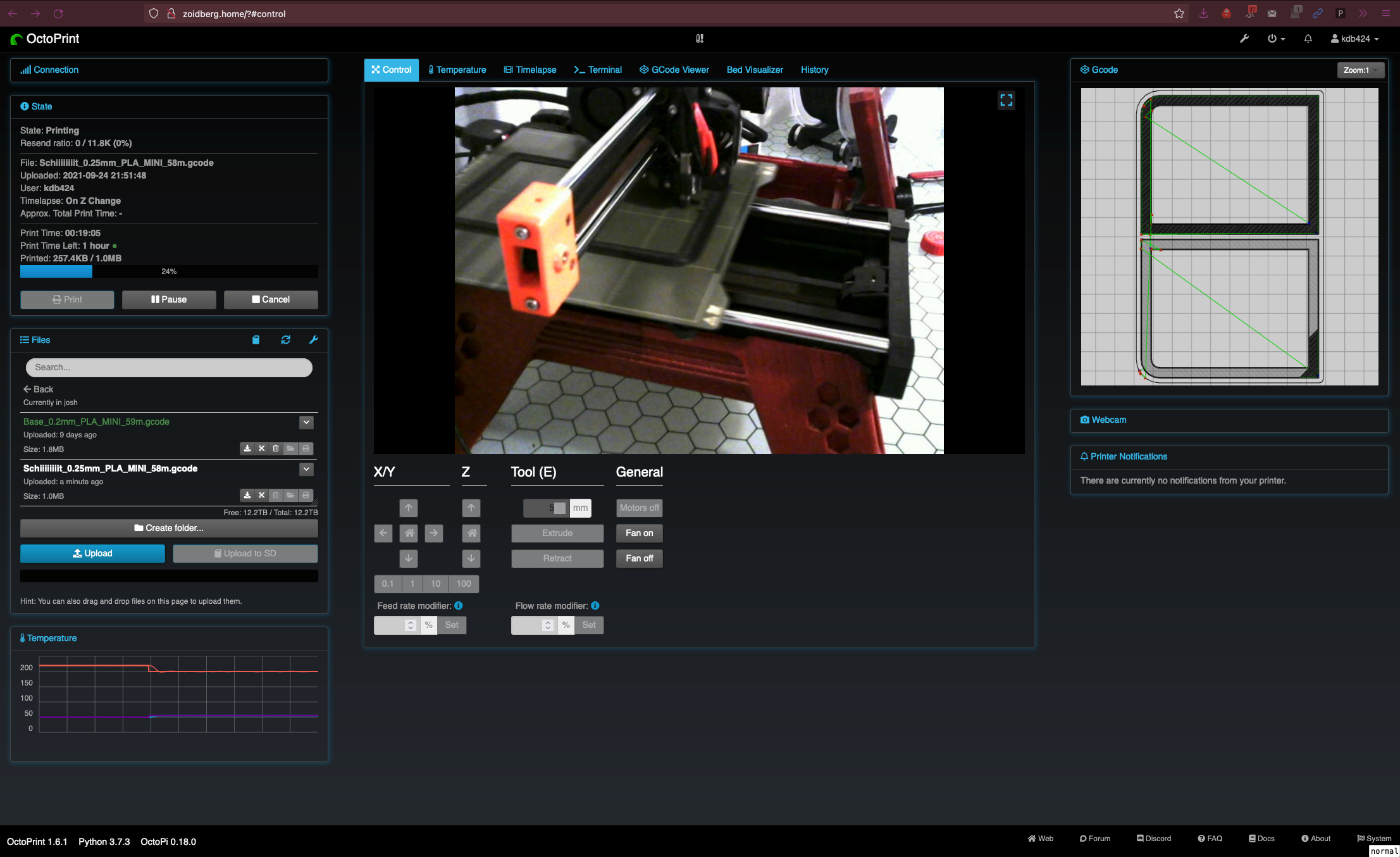

While Klipper may lack some features that your stock Marlin has, it's open source, always getting better, has great community support, and offers multiple front ends, so you aren't limited to OctoPrint. I'll be talking more about my foray into this firmware in a future article about flashing it to my Prusa Mini for a test drive, and why I did it. I'll leave some notes below for myself, and for others who stumble onto this and wonder how to do simple things they may need to relearn coming from a nice UI like Marlin on the Prusa series of printers, at least.

Notes

I'll come back and edit this as a reference for myself, and possibly to help others who are as confused as I was.

Z offset calibration

- Home the printer

- PROBE_CALIBRATE

- Do the paper test

- ACCEPT
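The steps above can be written out as the console commands you would type, in order. The small Python helper below just builds that list. The helper name is made up, and the `TESTZ` adjustment values depend entirely on how the paper feels on your printer. I've also added `SAVE_CONFIG` at the end, which the Klipper docs use to write the accepted value to the config file, so treat that last step as my addition to the notes above.

```python
def z_offset_console_commands(testz_adjustments_mm):
    """Build the ordered list of console commands for a paper-test Z offset calibration."""
    commands = ["G28", "PROBE_CALIBRATE"]  # home the printer, then start the calibration
    for dz in testz_adjustments_mm:
        # Each TESTZ nudges the nozzle up (positive) or down (negative) during the paper test.
        commands.append(f"TESTZ Z={dz:.3f}")
    commands += ["ACCEPT", "SAVE_CONFIG"]  # accept the height, then persist it (restarts Klipper)
    return commands
```

For example, `z_offset_console_commands([-0.1, -0.1, 0.025])` returns the sequence for lowering the nozzle twice and then backing off slightly before accepting.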