All computers need to give you access to files. This seems obvious at first, but
most people never think about how those files get stored. Files need to be
stored on a disk (or on a network, but let's focus on disks), and that disk
needs a way to know where files are, how big they are, etc. This is all part of
a filesystem. Some common ones that people may know about are:

- NTFS
- FAT32
- EXT4
- HFS+
- APFS

These are just a few examples that can be found on different operating systems,
and you are bound to recognize at least one.

Different filesystems are built with different goals or operating systems in
mind. As a quick example, HFS+ was built before SSDs were common, and is
optimized for spinning disk drives. That doesn't mean you can't use it on a
solid state drive, but the performance could be better. This is what gave rise
to APFS for Mac. It's built primarily with SSDs in mind. Once again, you can use
it on spinning disk drives, but it won't perform as well as HFS+.

Another big area that filesystems are optimized for is features. More modern
filesystems may offer things like on-disk compression to save space without
losing data, permissions to prevent users from accessing, modifying, or running
files that they aren't allowed to, and much more. Not all filesystems are
created equal, and each has upsides and downsides.

Why explain what a filesystem is if ZFS is not one? Well, ZFS is not just a
filesystem. It includes a filesystem as a component, but it is far more. I won't
explain all of the features it offers here, just some of the more useful ones
that I take advantage of.

RAID is a complex topic, so I'll only get into the basics here. It allows you to
use more than one disk (SSD, spinning disk, etc.) as a single logical drive.
There are many solutions for RAID, from hardware-backed RAID cards, to software
in your BIOS/EFI, to LVM.

One of the main drawbacks of hardware RAID is that if your RAID card dies, you
can lose access to your data unless you find an exact replacement for the card.
ZFS, on the other hand, lets you keep your data: as long as enough of the disks
show up, the data is there. ZFS also allows some other special types of RAID,
talked about later, that aren't possible with traditional RAID without setting
up complex layers of software on top of it. You can read a bit more about ZFS
vs Hardware RAID here.

Storing lots of data means that sometimes combining multiple RAID types together
is more cost or performance efficient. A common RAID type is RAID 10. This is a
RAID 1 (mirror) with a RAID 0 (stripe) on top of it. It would look something
like this.

In ZFS, we call these sections of disks VDEVs. In a RAID 10 layout like that,
there are 2 disks in each VDEV, and the stripe over all VDEVs is known as a
"pool". Every ZFS array has at least 1 pool and 1 VDEV, even if it's a single
disk.

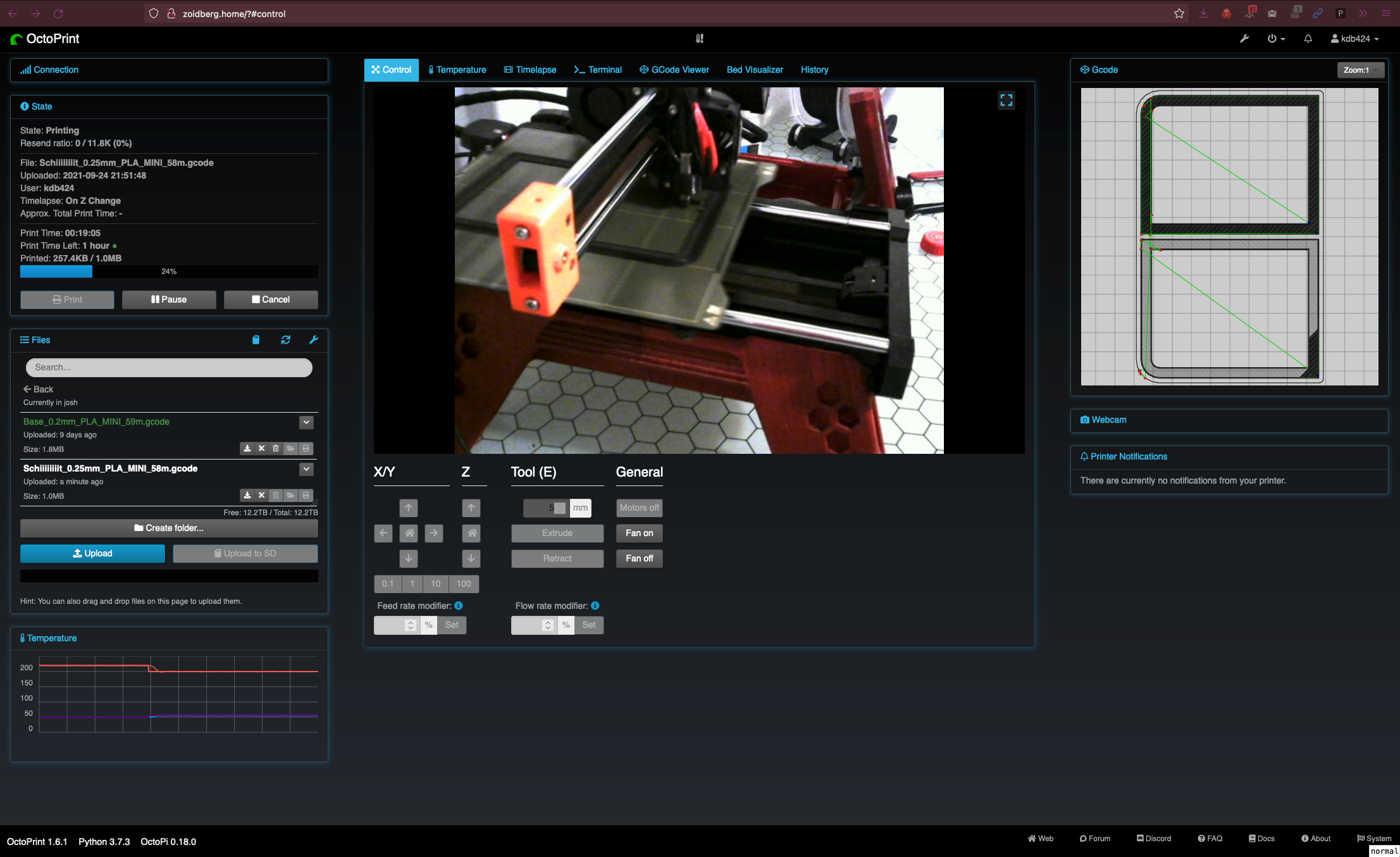

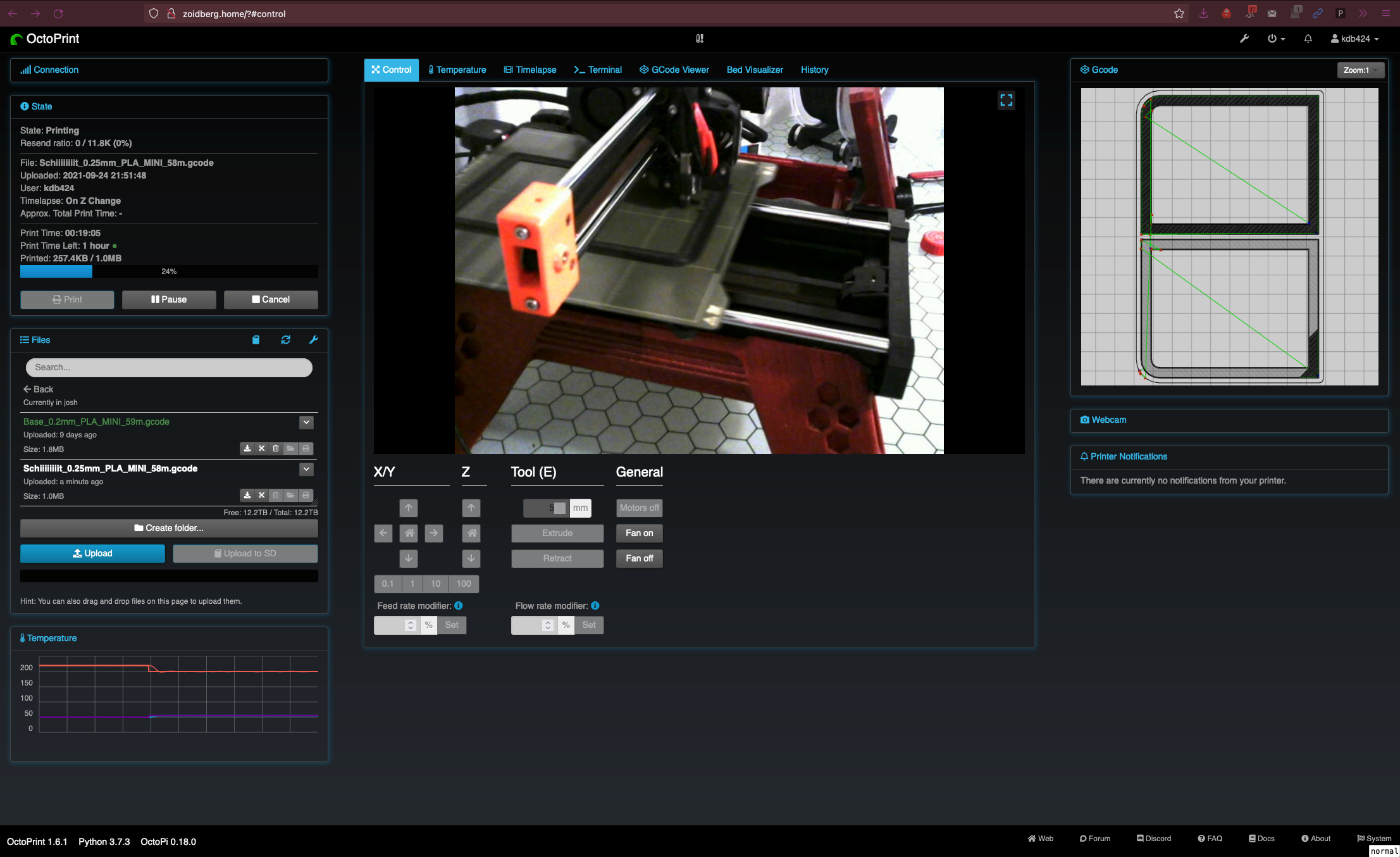
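
To put rough numbers on the RAID 10 layout, here is a small Python sketch. It is only a capacity model under my own assumptions, not anything ZFS runs: the function names and the 4 TB disk sizes are made up for illustration, and real pools lose a little extra space to metadata and reservations.

```python
def mirror_capacity(disk_sizes_tb):
    """A mirror VDEV keeps a full copy on every disk, so it holds only
    as much as its smallest member."""
    return min(disk_sizes_tb)


def pool_capacity(mirror_vdevs):
    """A pool stripes data across its VDEVs, so VDEV capacities add up."""
    return sum(mirror_capacity(vdev) for vdev in mirror_vdevs)


# Hypothetical RAID 10 style pool: two mirror VDEVs with two 4 TB disks each.
vdevs = [[4, 4], [4, 4]]
raw = sum(sum(vdev) for vdev in vdevs)
usable = pool_capacity(vdevs)
print(f"raw: {raw} TB, usable: {usable} TB")  # raw: 16 TB, usable: 8 TB
```

Half of the raw space goes to redundancy, which is the price of being able to lose one disk per mirror.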

Here is an example of a ZFS root filesystem used in one of my servers.

    ╰─$ zpool list zroot -v
    NAME         SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    zroot        476G   199G   277G        -         -    14%    41%  1.00x    ONLINE  -
      nvme0n1p2  476G   199G   277G        -         -    14%  41.7%      -    ONLINE

ZFS has some unique properties as far as filesystems go. I won't list all of the
layers, as some are optional, but I'll highlight a few of the important ones to
know about.

ZFS has a thing called ARC (Adaptive Replacement Cache) that caches data in RAM.
This allows frequently accessed files to be read much faster than from disk,
even if the disk is a fast SSD, as RAM is always faster.
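
To show the general idea of a RAM read cache, here is a toy sketch in Python. Note the big assumption: this is a plain LRU (least recently used) cache, which is a much simpler stand-in for ARC. The real ARC also weighs how often blocks are used, not just how recently. The class name and the fake "disk" are hypothetical.

```python
from collections import OrderedDict


class ReadCache:
    """Toy LRU read cache: a simplified stand-in, not the real ARC algorithm."""

    def __init__(self, capacity, read_from_disk):
        self.capacity = capacity              # max number of cached blocks
        self.read_from_disk = read_from_disk  # slow path used on a miss
        self.blocks = OrderedDict()           # oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return self.blocks[block_id]
        self.misses += 1
        data = self.read_from_disk(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)    # evict least recently used
        return data


# Hypothetical "disk" with ten blocks and a cache that holds two of them.
disk = {n: f"block-{n}" for n in range(10)}
cache = ReadCache(capacity=2, read_from_disk=disk.__getitem__)
for block_id in [1, 2, 1, 1, 3, 1]:
    cache.read(block_id)
print(cache.hits, cache.misses)  # 3 3
```

Block 1 is read over and over, so after the first miss it is always served from the cache, while the rarely used block 2 gets evicted.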

L2ARC is an optional secondary ARC that can be stored on an SSD to speed up
reads when RAM is totally full. It is generally only used on massive arrays, as
ARC is really efficient at deciding what should be cached on smaller arrays, and
L2ARC has some drawbacks, as it takes up some RAM of its own.

A ZIL is the ZFS Intent Log. This is where ZFS records the synchronous writes
that it intends to make, before they are committed to the pool proper. This is
great in case of a power outage or a kernel panic stopping the system in the
middle of a write. Writes that made it into the log can be replayed the next
time the pool is imported, and a write that wasn't finished properly is never
committed, so there won't be corruption. The ZIL normally lives on the same
disk(s) as the filesystem, though some arrays add a special device called a
SLOG, usually an SSD, to write these intents to, freeing up the normal disks
from that extra work. You can read further on this topic here.
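
The intent log idea can be sketched as a toy model in Python. This is my own simplification for illustration, not how ZFS is implemented: the `ToyPool` class and its method names are hypothetical, and real ZFS batches changes into transaction groups with far more machinery than a dict and a list.

```python
class ToyPool:
    """Toy model of an intent log: log first, commit later, replay after a crash."""

    def __init__(self):
        self.committed = {}   # data safely written to the main disks
        self.intent_log = []  # logged writes that are not committed yet
        self.pending = {}     # changes held in RAM, lost on a crash

    def sync_write(self, key, value):
        # The intent is recorded on stable storage before we report success.
        self.intent_log.append((key, value))
        self.pending[key] = value

    def commit(self):
        # Pending changes reach the main disks, so the log entries can go.
        self.committed.update(self.pending)
        self.pending.clear()
        self.intent_log.clear()

    def crash(self):
        # Power loss or kernel panic: everything that was only in RAM is gone.
        self.pending.clear()

    def import_pool(self):
        # On the next import, replay whatever the log still holds.
        for key, value in self.intent_log:
            self.committed[key] = value
        self.intent_log.clear()


pool = ToyPool()
pool.sync_write("a", 1)
pool.commit()
pool.sync_write("b", 2)  # logged, but not committed yet
pool.crash()
pool.import_pool()
print(pool.committed)    # {'a': 1, 'b': 2}
```

In this model a SLOG would simply mean that `intent_log` lives on its own fast device instead of sharing the main disks.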

Special vdevs are a type of VDEV unique to ZFS. ZFS keeps track of files and
blocks by size. Spinning disks are not good at handling small files and things
like metadata, so ZFS allows you to have a special vdev made of SSDs to take the
burden of these types of files and blocks. This gives a massive increase in
performance while keeping overall storage cost low, as most of the bulk storage
is handled by the slow spinning disk drives, with the SSDs used where they are
best.

This is a fantastic read on the topic.
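
Here is a small Python sketch of the routing decision a special vdev makes possible. Treat it as a model under assumptions: the function name is made up, and the 32 KiB limit is just an example value. In real ZFS the size cut-off is set per dataset with the `special_small_blocks` property, and as far as I know a value of 0 means only metadata goes to the special vdev.

```python
KIB = 1024


def choose_vdev_class(is_metadata, block_size, small_block_limit):
    """Return which class of VDEV a block would land on in this toy model."""
    if is_metadata or block_size <= small_block_limit:
        return "special"  # SSDs: metadata and small blocks
    return "normal"       # spinning disks: bulk data


# Hypothetical limit: data blocks of 32 KiB or less go to the SSDs.
limit = 32 * KIB
print(choose_vdev_class(True, 4 * KIB, limit))     # special
print(choose_vdev_class(False, 16 * KIB, limit))   # special
print(choose_vdev_class(False, 128 * KIB, limit))  # normal
```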

I could spend the rest of existence rambling about everything that ZFS can do,
so I'll leave a list of other features that are worth looking into.

These are the features that make ZFS the ultimate ecosystem, and not just a
filesystem, for my NAS/SAN use case. They also give me data protection even for
my single disks, allowing me to back up and restore quickly with snapshots, and
send/recv faster than any other method available. I've accidentally deleted TBs
of data before when targeting the wrong disk in an rm operation, only to
undelete the files in less than 5 seconds with a snapshot. I've moved countless
TBs over a network, maxing out 10 gigabit speeds in ways that things like cp and
rsync could never get close to matching. I've even torture tested machines by
pulling RAM out of them while data was being sent just to see if I could cause
corruption, and found none (data that wasn't sent was missing, but everything
that was sent was saved properly). This is unmatched on any other filesystem in
my opinion, including BTRFS, but that's a rant for another day.

OpenZFS wiki

Wikipedia ZFS page

Below is an example of my array that is currently live in my SAN, serving
everything including this page. It consists of three 10TB spinning disks in a
raidz1 and two 500GB SSDs acting as an L2ARC as well as a special VDEV in a
mirror.

    ╰─$ zpool list tank -v
    NAME                                       SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    tank                                      27.7T  8.71T  19.0T        -         -     0%    31%  1.00x    ONLINE  -
      raidz1                                  27.3T  8.64T  18.6T        -         -     0%  31.7%      -    ONLINE
        ata-WDC_WD100EMAZ-00WJTA0_1EG9UBBN        -      -      -        -         -      -      -      -    ONLINE
        ata-WDC_WD100EMAZ-00WJTA0_1EGG56NZ        -      -      -        -         -      -      -      -    ONLINE
        ata-WDC_WD100EMAZ-00WJTA0_2YJXTUWD        -      -      -        -         -      -      -      -    ONLINE
    special                                       -      -      -        -         -      -      -      -         -
      mirror                                   428G  70.5G   358G        -         -     9%  16.5%      -    ONLINE
        sda5                                      -      -      -        -         -      -      -      -    ONLINE
        ata-CT500MX500SSD1_2005E286AD8B           -      -      -        -         -      -      -      -    ONLINE
    cache                                         -      -      -        -         -      -      -      -         -
      ata-CT500MX500SSD1_1904E1E57733-part1   34.7G  31.2G  3.51G        -         -     0%  89.9%      -    ONLINE
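
If you wonder why three 10TB disks show up as 27.3T, the arithmetic can be checked with a few lines of Python. This assumes the drives are sold in decimal terabytes while `zpool list` prints binary units, and that the raidz1 line is the raw size with parity included. The usable figure at the end is only a rough estimate of mine (one disk's worth of parity out of three), ignoring padding and other overhead.

```python
TB = 10**12   # decimal terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, what zpool shows as "T"


def tb_to_tib(terabytes):
    """Convert decimal terabytes to binary tebibytes."""
    return terabytes * TB / TIB


disks = 3
raw_tib = disks * tb_to_tib(10)
print(f"raw raidz1 size: {raw_tib:.1f} TiB")       # raw raidz1 size: 27.3 TiB

# Rough estimate only: raidz1 spends about one disk's worth on parity.
approx_usable_tib = raw_tib * (disks - 1) / disks
print(f"approx usable: {approx_usable_tib:.1f} TiB")  # approx usable: 18.2 TiB
```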